Evaluating Machine Learning Models

Evaluating a machine learning model is how you establish whether it is effective and reliable. This article gives an overview of the metrics, techniques, and best practices involved.

Introduction: Setting the Stage

Machine learning models play a pivotal role in various domains, from image recognition and natural language processing to predictive analytics and recommendation systems. However, without proper evaluation, it’s impossible to gauge the performance and reliability of these models. Evaluation not only helps identify strengths and weaknesses but also guides the iterative process of model improvement.

Key Metrics for Evaluating Machine Learning Models

To assess the performance of machine learning models, several metrics are employed. These metrics provide quantitative measures of a model’s accuracy, precision, recall, and overall effectiveness.

Accuracy

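Accuracy is one of the most fundamental metrics, representing the proportion of correct predictions made by the model. It’s calculated as the number of true positives plus true negatives, divided by the total number of instances. Accuracy can be misleading on imbalanced data: a model that always predicts the majority class scores well while never detecting the minority class.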

Precision and Recall

Precision measures the fraction of positive predictions that are truly positive, while recall measures the fraction of actual positive instances that are correctly identified. These metrics are particularly useful in scenarios where false positives or false negatives have different implications.

F1 Score

The F1 score is the harmonic mean of precision and recall, providing a single metric that balances both measures. It’s widely used when false positives and false negatives are equally undesirable.
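As a minimal sketch of the three definitions above, the following computes precision, recall, and F1 from raw counts. The function name and the counts are hypothetical, chosen only for illustration; `tp`, `fp`, and `fn` stand for the numbers of true positives, false positives, and false negatives.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from confusion-matrix counts."""
    # Guard against division by zero when there are no predicted or actual positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 true positives, 2 false positives, 8 false negatives.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.3f}")
# prints: precision=0.80 recall=0.50 f1=0.615
```

Because the harmonic mean is pulled toward the smaller of its two inputs, the F1 score here (about 0.615) sits below the arithmetic mean of precision and recall (0.65).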

Other Metrics

Depending on the problem domain and specific requirements, additional metrics may be employed, such as Area Under the ROC Curve (AUC) for classification and Mean Squared Error (MSE) or Mean Absolute Error (MAE) for regression.

Comparing Machine Learning Models: Evaluation Techniques

To compare the performance of multiple machine learning models, several evaluation techniques are available:

- Cross-Validation: This technique partitions the data into k subsets (folds). The model is trained on k-1 folds and evaluated on the remaining held-out fold. The process is repeated so that each fold serves as the held-out set once, and the results are averaged, providing a more robust estimate of model performance than a single split.

- Bootstrapping: Like cross-validation, bootstrapping is a resampling technique, but it creates multiple training sets by sampling the data with replacement. Each model is trained on one resampled set and is typically evaluated on the instances that were not drawn (the out-of-bag instances); the results are combined to estimate model performance and its variability.

- Receiver Operating Characteristic (ROC) Curves: ROC curves plot the true positive rate against the false positive rate as the classification threshold varies. They are particularly useful in binary classification problems and can help select an appropriate threshold.
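The two resampling schemes in the list above can be sketched in plain Python. This is an illustrative sketch, not a reference implementation: the helper names (`k_fold_indices`, `bootstrap_indices`, `cross_validate`) and the toy "mean predictor" model are assumptions made for the example.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is held out exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Slicing with a stride of k gives folds whose sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


def bootstrap_indices(n_samples, seed=0):
    """Draw n indices with replacement; unsampled indices form the out-of-bag set."""
    rng = random.Random(seed)
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    out_of_bag = sorted(set(range(n_samples)) - set(in_bag))
    return in_bag, out_of_bag


def cross_validate(xs, ys, fit, score, k=5):
    """Average a score over k folds; `fit` and `score` are supplied by the caller."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    return sum(scores) / len(scores)


# Toy usage: the "model" is just the mean of the training targets,
# scored by mean absolute error on the held-out fold.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def mae_of_constant(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)


xs = list(range(10))
ys = [3.0] * 10
cv_mae = cross_validate(xs, ys, fit_mean, mae_of_constant, k=5)
print(cv_mae)  # prints 0.0, since every target equals the training mean
```

In practice the `fit` and `score` callables would wrap a real model and metric; the point of the sketch is only that no instance is ever scored by a model that saw it during training.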

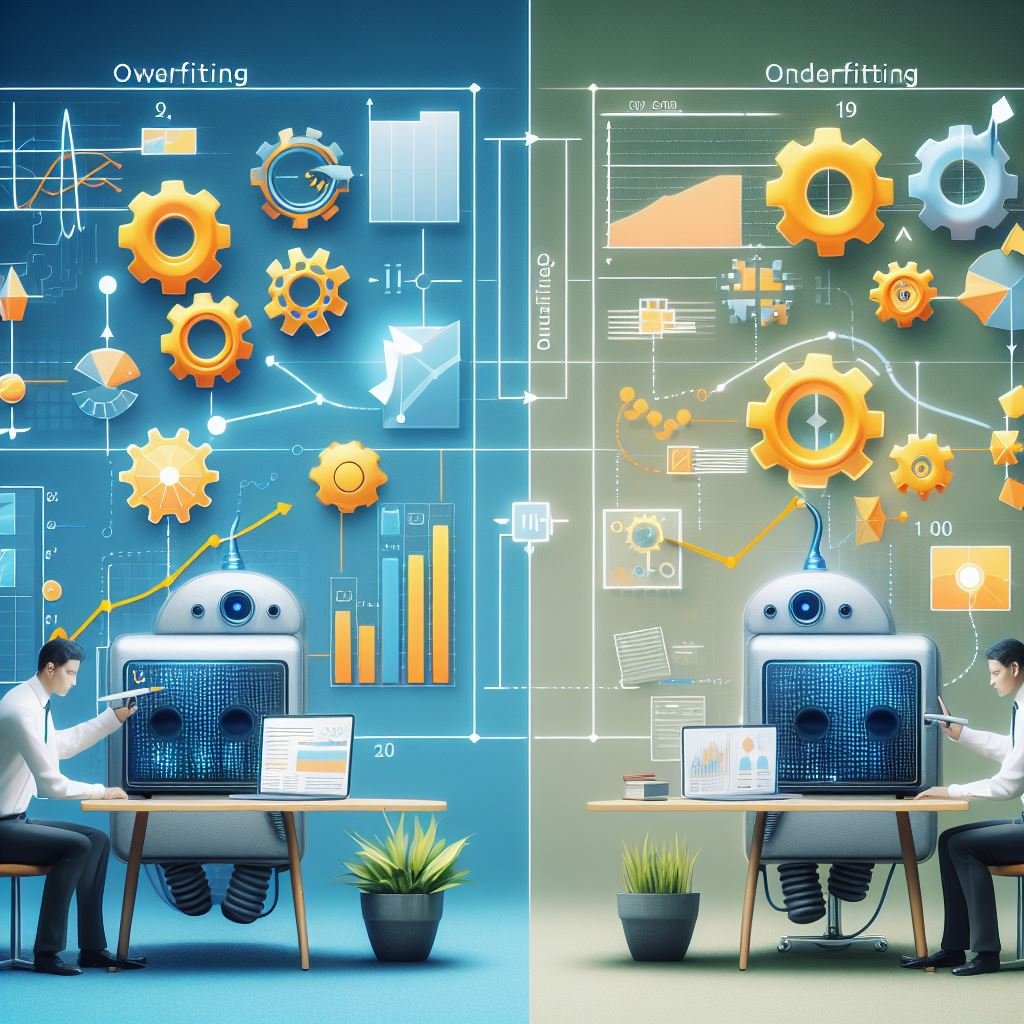

Overfitting vs. Underfitting: Evaluating Machine Learning Model Performance

Overfitting and underfitting are two common issues that can arise during the training of machine learning models.

- Overfitting: When a model is too complex and captures noise or irrelevant patterns in the training data, it fails to generalize well to unseen data. Overfitting can lead to high training accuracy but poor performance on new instances.

- Underfitting: Conversely, underfitting occurs when a model is too simple and fails to capture the underlying patterns in the data. This results in poor performance on both training and test data.

Evaluating machine learning models involves striking a balance between overfitting and underfitting, ensuring that the model is complex enough to capture relevant patterns while remaining generalizable to new data.
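One way to see both failure modes is to compare training error with held-out error for a model that is deliberately too flexible and one that is deliberately too simple. The sketch below uses synthetic data and two toy models (a 1-nearest-neighbour memorizer and a constant predictor); all of these are assumptions chosen for illustration.

```python
import random


def fit_1nn(xs, ys):
    """A deliberately over-flexible model: memorize every training point."""
    points = list(zip(xs, ys))
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]


def fit_constant(xs, ys):
    """A deliberately over-simple model: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean


def mse(model, xs, ys):
    """Mean squared error of a callable model on the given data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


rng = random.Random(0)
# Synthetic data (an assumption for illustration): y = 2x plus Gaussian noise.
xs = [i / 10 for i in range(100)]
ys = [2 * x + rng.gauss(0, 1) for x in xs]
train_x, train_y = xs[0::2], ys[0::2]  # even-indexed points for training
test_x, test_y = xs[1::2], ys[1::2]    # odd-indexed points held out

for name, fit in [("1-NN (too flexible)", fit_1nn), ("constant (too simple)", fit_constant)]:
    model = fit(train_x, train_y)
    print(f"{name}: train MSE={mse(model, train_x, train_y):.2f}, "
          f"test MSE={mse(model, test_x, test_y):.2f}")
```

The memorizing model reaches zero training error because it reproduces the noise in each training point, yet its error on held-out points is nonzero: a gap between training and test error is the usual sign of overfitting. The constant model shows a large error on both sets, which is the signature of underfitting.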

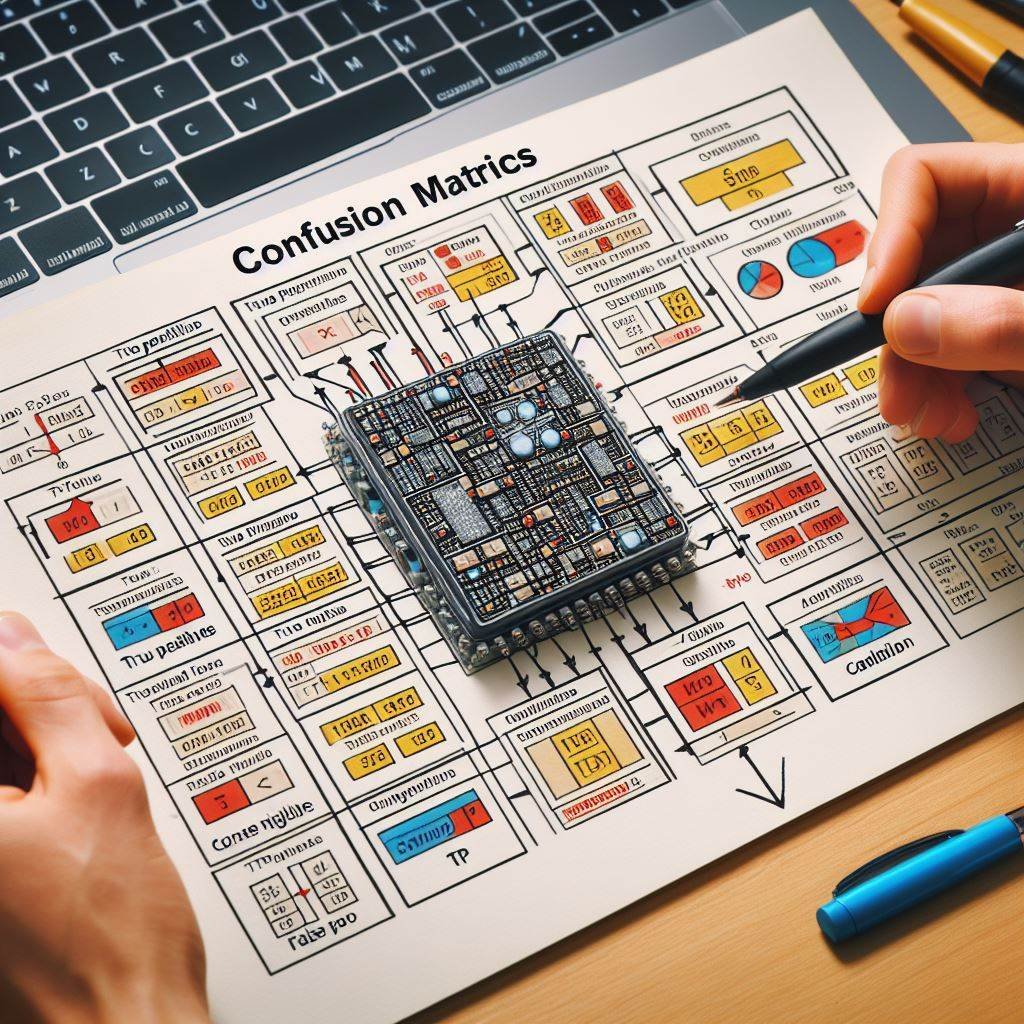

Using Confusion Matrices to Evaluate Machine Learning Models

Confusion matrices provide a comprehensive way to evaluate the performance of machine learning models, particularly in classification tasks. A confusion matrix is a table that cross-tabulates predicted classes against actual classes. For a binary problem it contains four counts:

- True Positives (TP): Positive instances correctly classified as positive.

- True Negatives (TN): Negative instances correctly classified as negative.

- False Positives (FP): Negative instances incorrectly classified as positive.

- False Negatives (FN): Positive instances incorrectly classified as negative.

By analyzing the values in the confusion matrix, metrics such as precision, recall, and accuracy can be calculated, providing valuable insights into the model’s performance.
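A small sketch of how the four counts can be tallied from true and predicted labels, and how accuracy follows from them. The function name and the ten labels are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}


# Hypothetical labels for ten instances.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

cm = confusion_counts(y_true, y_pred)
accuracy = (cm["TP"] + cm["TN"]) / len(y_true)
print(cm, accuracy)
# prints: {'TP': 3, 'TN': 5, 'FP': 1, 'FN': 1} 0.8
```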

Evaluating Machine Learning Models in Classification Tasks

In classification tasks, where the goal is to assign instances to predefined categories, specific evaluation metrics and strategies are employed:

- Precision, Recall, and F1 Score: As discussed earlier, these metrics are particularly useful in classification problems, especially when the cost of false positives and false negatives varies.

- Area Under the Receiver Operating Characteristic (AUROC) Curve: The AUROC summarizes the model’s ability to distinguish between classes across all classification thresholds. A value of 0.5 corresponds to random ranking and 1.0 to perfect separation.

- Log Loss or Cross-Entropy Loss: This metric scores the predicted probabilities rather than the predicted labels. It heavily penalizes confident predictions that turn out to be wrong, so lower values indicate probabilities that are both accurate and well calibrated.
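The last two metrics in the list above can be sketched without any libraries. The AUROC function below uses the pairwise-ranking interpretation (the chance that a randomly chosen positive receives a higher score than a randomly chosen negative), which is quadratic in the number of instances and intended only for illustration. The function names and sample values are assumptions.

```python
import math


def roc_auc(y_true, scores):
    """AUROC as the chance a random positive outscores a random negative (ties count half)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood of the true labels under the predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # clip so log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Hypothetical labels and predicted probabilities of the positive class.
y_true = [0, 0, 1, 1]
probs = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, probs))  # prints 0.75: three of the four positive/negative pairs are ranked correctly

# A confident wrong prediction costs far more than a hedged one.
print(log_loss([1], [0.01]) > log_loss([1], [0.5]))  # prints True
```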

Evaluating Machine Learning Models in Regression Tasks

In regression tasks, where the goal is to predict continuous numerical values, different evaluation metrics and methods are used:

- Mean Squared Error (MSE): MSE measures the average squared difference between the predicted and actual values, making it sensitive to outliers.

- Root Mean Squared Error (RMSE): RMSE is the square root of MSE. Because it is expressed in the same units as the target variable, it is easier to interpret as a typical error magnitude.

- Mean Absolute Error (MAE): MAE calculates the average absolute difference between predicted and actual values, making it less sensitive to outliers compared to MSE.

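- Coefficient of Determination (R^2): R^2 measures the proportion of variance in the target variable that is explained by the model, with values closer to 1 indicating better fit. On held-out data it can be negative, which means the model performs worse than always predicting the mean of the targets.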
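The four regression metrics above follow directly from the prediction errors. A minimal sketch, with a hypothetical function name and made-up sample values:

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, MAE, and R^2 for paired actual and predicted values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    r2 = 1 - ss_res / ss_tot  # undefined if all targets are identical
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "R2": r2}


# Hypothetical actual and predicted values.
y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
metrics = regression_metrics(y_true, y_pred)
print({name: round(value, 4) for name, value in metrics.items()})
# prints: {'MSE': 0.375, 'RMSE': 0.6124, 'MAE': 0.5, 'R2': 0.9486}
```

The single largest error (1.0 on the last value) contributes two thirds of the squared-error total but only half of the absolute-error total, which is why MSE reacts more strongly to outliers than MAE.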

Advanced Techniques for Evaluating Machine Learning Models

While the techniques mentioned above are widely used, there are several advanced methods for evaluating machine learning models:

- A/B Testing: In A/B testing, different models or configurations are deployed simultaneously, and their performance is compared using real-world data. This approach provides a practical evaluation of model performance in production environments.

- Bayesian Methods: Bayesian methods can quantify uncertainty in performance estimates and model parameters. Related tools such as Bayesian optimization, which often uses a Gaussian process as its surrogate model, are primarily used to tune hyperparameters rather than to evaluate a finished model.

- Model Interpretability: Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) provide insights into how models make predictions, allowing for a more comprehensive evaluation of model behavior and fairness.
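For the A/B testing item above, one common way to judge whether the observed difference between two deployed models is larger than chance is a two-proportion z-test on a success rate such as click-through or conversion. The article does not prescribe a particular test, so this choice, the function name, and the counts are all assumptions made for illustration.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two success rates."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical traffic: model A converts 100 of 1000 users, model B 130 of 1000.
z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z={z:.2f}, p={p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise alone, but it says nothing about whether the difference is large enough to matter for the business objective.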

Case Studies: Real-World Evaluations of Machine Learning Models

To illustrate the importance of model evaluation, let’s explore a few real-world scenarios:

- Fraud Detection: In the financial services industry, accurately detecting fraudulent transactions is critical. By evaluating models using precision, recall, and AUROC, companies can balance the cost of wrongly flagging genuine transactions (false positives) against the cost of missing fraudulent ones (false negatives).

- Predictive Maintenance: In manufacturing and industrial settings, predictive maintenance models are used to forecast equipment failures. Evaluating these models using metrics like MSE and MAE helps optimize maintenance schedules and minimize downtime.

- Recommendation Systems: E-commerce platforms and streaming services heavily rely on recommendation systems to provide personalized suggestions to users. Evaluating these models using techniques like A/B testing and model interpretability ensures that recommendations are relevant and fair.

Best Practices for Evaluating Machine Learning Models

To ensure effective and reliable model evaluation, it’s essential to follow best practices:

- Use Appropriate Evaluation Metrics: Select metrics that align with the problem domain and business objectives.

- Separate Training and Test Data: Evaluate models on unseen test data to obtain an unbiased estimate of performance.

- Employ Cross-Validation or Bootstrapping: Utilize techniques like cross-validation or bootstrapping to obtain more robust performance estimates.

- Understand Model Limitations: Recognize the assumptions and limitations of the evaluation metrics and techniques used.

- Monitor Performance Over Time: Continuously evaluate and monitor model performance in production environments to detect any performance degradation or concept drift.

- Interpret Results Carefully: Consider the practical implications of evaluation results and avoid over-optimizing for specific metrics at the expense of real-world utility.

Frequently Asked Questions (FAQs)

Q: Why is evaluating machine learning models important?

A: Evaluating machine learning models is crucial for assessing their performance, identifying strengths and weaknesses, and guiding the iterative process of model improvement. It helps ensure that models are reliable and effective in real-world applications.

Q: What are some common evaluation metrics for classification tasks?

A: Common evaluation metrics for classification tasks include accuracy, precision, recall, F1 score, and Area Under the Receiver Operating Characteristic (AUROC) curve.

Q: How does cross-validation help in evaluating machine learning models?

A: Cross-validation partitions the data into k subsets (folds), trains the model on k-1 of them, and evaluates it on the remaining held-out fold. The process is repeated so that each fold is held out once, and the results are averaged, providing a more robust estimate of model performance than a single train/test split.

Q: What is the purpose of using confusion matrices in model evaluation?

A: Confusion matrices provide a comprehensive way to evaluate the performance of machine learning models in classification tasks. They summarize the correct and incorrect predictions made by the model, allowing the calculation of metrics like precision, recall, and accuracy.

Q: What are some advanced techniques for evaluating machine learning models?

A: Advanced techniques for evaluating machine learning models include A/B testing, Bayesian methods, and model interpretability techniques like SHAP and LIME.

Conclusion

Evaluating machine learning models is a critical step in the development and deployment of reliable and effective solutions. By understanding the various evaluation techniques, metrics, and best practices, practitioners can make informed decisions and iteratively improve their models. Whether working on classification, regression, or other machine learning tasks, a comprehensive evaluation strategy is essential for ensuring model performance and reliability in real-world applications.