Artificial Hand Embedded System Using ML (FYP)

In biomedical engineering, the development of advanced prosthetic limbs has been a longstanding goal. With the advent of machine learning and embedded systems technologies, researchers have been able to create intelligent and adaptive artificial hands that can significantly improve the quality of life for individuals with upper limb amputations. This article describes an artificial hand embedded system that uses machine learning algorithms for prosthetic control, from sensing and gesture classification through embedded implementation and testing.

Literature Review

Over the years, numerous studies have been conducted to explore the potential of machine learning in prosthetic limb control. Researchers have investigated various techniques, such as pattern recognition, neural networks, and reinforcement learning, to enhance the functionality and intuitive control of artificial hands. These studies have demonstrated promising results in terms of accurate gesture recognition, improved dexterity, and adaptation to user-specific requirements.

Project Objectives

The primary objectives of this artificial hand embedded system project are:

- To develop an intelligent and adaptive prosthetic hand that can mimic the natural movements and dexterity of a human hand.

- To integrate machine learning algorithms for intuitive and user-friendly control of the prosthetic hand.

- To incorporate advanced sensor technology for accurate gesture recognition and data acquisition.

- To design a robust and efficient embedded system architecture for real-time processing and actuation of the prosthetic hand.

Theoretical Background

Machine Learning for Prosthetic Control

Machine learning algorithms play a crucial role in the control and adaptation of prosthetic hands. These algorithms are trained on data collected from sensors and user inputs to learn and recognize patterns corresponding to different hand gestures and movements. Some of the commonly used machine learning techniques in prosthetic control include:

- Supervised Learning: Algorithms like Support Vector Machines (SVMs) and Artificial Neural Networks (ANNs) are trained on labeled data to recognize specific patterns and classify them into corresponding hand gestures or movements.

- Unsupervised Learning: Techniques like clustering and dimensionality reduction can be used to identify inherent patterns in sensor data without prior labeling, enabling the system to adapt to user-specific requirements.

- Reinforcement Learning: These algorithms learn from trial-and-error interactions with the environment, allowing the prosthetic hand to optimize its control strategies based on user feedback and performance metrics.
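As a minimal sketch of the supervised case, the following trains a nearest-centroid classifier on labeled feature vectors and uses it to label a new sample. Nearest-centroid is chosen here only because it fits in a few lines; a real system would more likely use an SVM or a neural network as listed above. The feature values, gesture labels, and function names are illustrative assumptions.

```python
from collections import defaultdict
from math import dist


def train_centroids(samples):
    """Average the feature vectors of each gesture label.

    samples: list of (feature_vector, label) pairs.
    Returns a dict mapping label -> centroid (list of floats).
    """
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    centroids = {}
    for label, vectors in grouped.items():
        n = len(vectors)
        # zip(*vectors) walks the vectors column by column.
        centroids[label] = [sum(col) / n for col in zip(*vectors)]
    return centroids


def classify(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical two-channel EMG features (e.g. mean amplitude per channel).
training_data = [
    ([0.9, 0.1], "open"),
    ([0.8, 0.2], "open"),
    ([0.1, 0.9], "close"),
    ([0.2, 0.8], "close"),
]
model = train_centroids(training_data)
print(classify(model, [0.7, 0.3]))  # prints "open"
```

The same train-then-classify split applies to the heavier models: training happens on labeled recordings, and only the resulting parameters need to be used at run time.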

Embedded System Architecture

The embedded system architecture plays a crucial role in the integration and real-time operation of the artificial hand. It typically consists of the following components:

- Microcontroller or Embedded Processor: This is the core component responsible for executing the machine learning algorithms, processing sensor data, and controlling the actuators of the prosthetic hand.

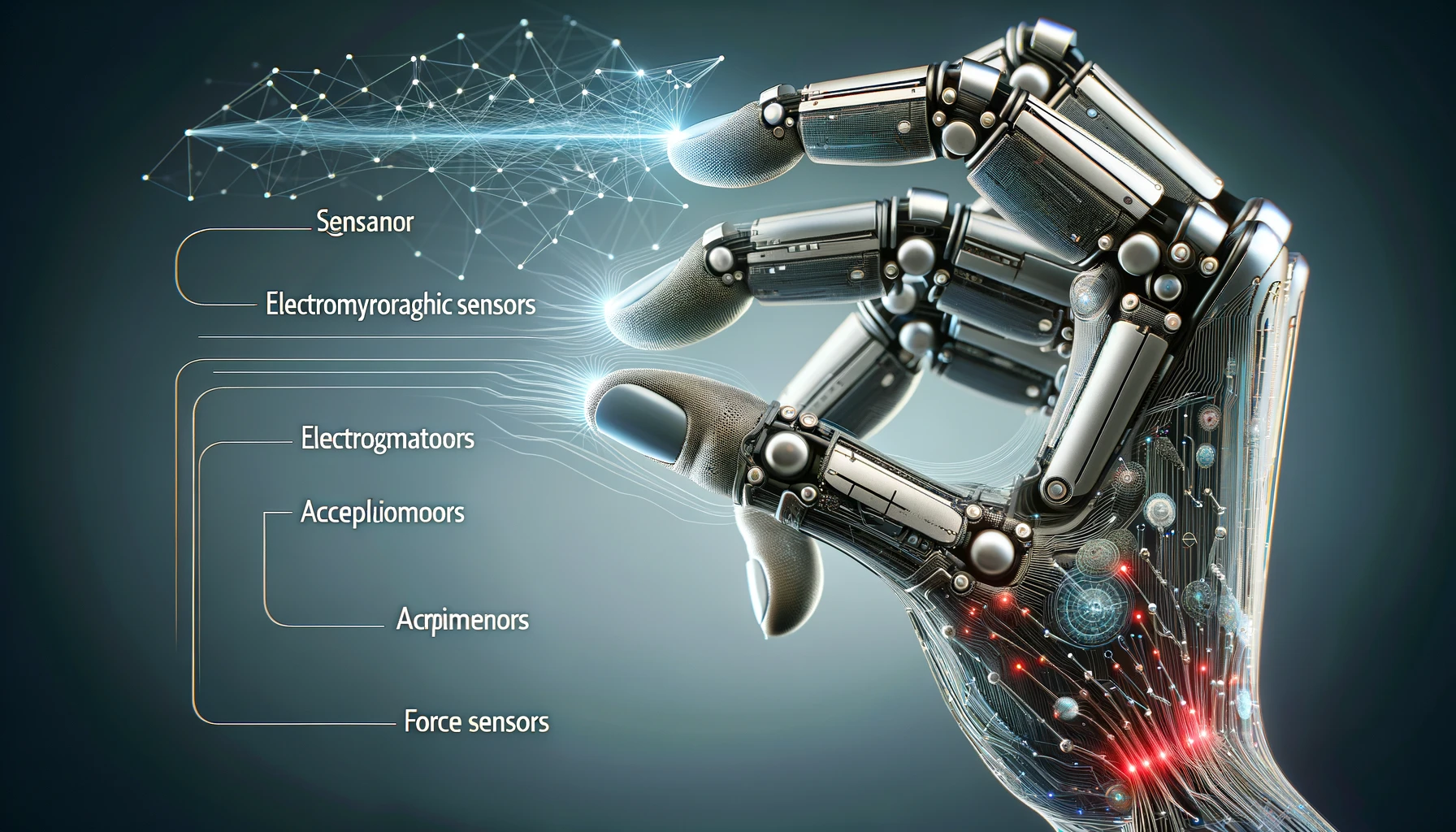

- Sensor Module: Various sensors, such as electromyography (EMG) sensors, accelerometers, gyroscopes, and force sensors, are integrated to capture user inputs and environmental data.

- Actuator Module: This module consists of motors, servos, or other actuators that translate the output of the machine learning algorithms into physical movements of the prosthetic hand.

- Power Management Unit: This unit regulates and distributes battery power to the processor, sensors, and actuators. Efficient power management is essential for long-lasting operation of the embedded system.

- Communication Interface: This component facilitates communication between the embedded system and external devices, such as a computer or mobile app, for data transfer, monitoring, and configuration.
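One way to picture how these components interact is a sense, classify, actuate cycle. The sketch below models each module as a plain Python callable so it runs without hardware. The class and the names `read_sensors`, `classify`, and `drive_actuators` are hypothetical stand-ins, not part of any specific hardware API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ProstheticController:
    """Ties the sensor, processing, and actuator modules together."""
    read_sensors: Callable[[], List[float]]       # sensor module
    classify: Callable[[List[float]], str]        # ML model on the processor
    drive_actuators: Callable[[str], None]        # actuator module
    log: List[str] = field(default_factory=list)  # stand-in for the comms link

    def step(self) -> str:
        """Run one sense, classify, actuate cycle and record the result."""
        sample = self.read_sensors()
        gesture = self.classify(sample)
        self.drive_actuators(gesture)
        self.log.append(gesture)
        return gesture


# Stub modules so the sketch runs on a desktop machine.
commands = []
controller = ProstheticController(
    read_sensors=lambda: [0.8, 0.1],
    classify=lambda s: "open" if s[0] > s[1] else "close",
    drive_actuators=commands.append,
)
controller.step()
print(commands)  # prints ['open']
```

Keeping each module behind a simple function boundary makes it possible to swap a stub for real driver code, or one classifier for another, without touching the loop itself.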

System Design and Architecture

The artificial hand embedded system project is organized into the following parts:

- Sensor Integration and Data Acquisition

- Machine Learning Algorithms for Prosthetic Control

- Embedded System Development

- Testing and Evaluation

- Future Work and Improvements

1. Sensor Integration and Data Acquisition

The first step in developing the artificial hand embedded system involves integrating various sensors to capture user inputs and environmental data. Common sensors used in this project include:

- Electromyography (EMG) Sensors: These sensors measure the electrical activity of muscles, allowing the system to detect and interpret user intentions for hand gestures and movements.

- Accelerometers and Gyroscopes: These inertial sensors provide information about the orientation and motion of the prosthetic hand, enabling accurate tracking and control.

- Force Sensors: Integrated into the fingertips or palm of the prosthetic hand, force sensors measure contact and grip force, providing tactile feedback that improves dexterity and object manipulation.

The sensor data is preprocessed, typically by filtering it and segmenting it into short windows, and then fed into the machine learning algorithms for pattern recognition and gesture classification.
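To make the preprocessing step concrete, here is a small sketch that cuts a single EMG channel into overlapping windows and computes three widely used time-domain features per window: mean absolute value (MAV), root mean square (RMS), and zero-crossing count (ZC). The window size, step, and sample values are assumptions chosen for illustration, not parameters from this project.

```python
from math import sqrt


def sliding_windows(signal, size, step):
    """Yield overlapping windows of `size` samples, advancing by `step`."""
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def mean_absolute_value(window):
    """Average rectified amplitude, a common EMG intensity feature."""
    return sum(abs(x) for x in window) / len(window)


def root_mean_square(window):
    """RMS amplitude, related to signal power."""
    return sqrt(sum(x * x for x in window) / len(window))


def zero_crossings(window):
    """Count sign changes, a rough indicator of frequency content."""
    return sum(1 for a, b in zip(window, window[1:]) if a * b < 0)


def extract_features(signal, size=4, step=2):
    """Return one [MAV, RMS, ZC] feature vector per window."""
    return [
        [mean_absolute_value(w), root_mean_square(w), zero_crossings(w)]
        for w in sliding_windows(signal, size, step)
    ]


# Made-up samples standing in for a digitized EMG channel.
emg = [1.0, -1.0, 1.0, -1.0, 2.0, -2.0]
features = extract_features(emg)
print(features[0])  # prints [1.0, 1.0, 3]
```

Each feature vector produced this way is what a classifier would receive as input. With several EMG channels, the per-channel vectors are usually concatenated.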

2. Machine Learning Algorithms for Prosthetic Control

The heart of the artificial hand embedded system lies in the machine learning algorithms responsible for interpreting sensor data and generating appropriate control signals for the actuators. Several algorithms can be employed, each with its own strengths and trade-offs:

- Support Vector Machines (SVMs): SVMs are supervised learning algorithms that can effectively classify hand gestures based on labeled sensor data. They handle high-dimensional feature vectors well, and a soft margin gives them some tolerance to noisy training samples.

- Artificial Neural Networks (ANNs): ANNs, particularly deep learning architectures like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have shown remarkable performance in pattern recognition and time-series data analysis, making them suitable for prosthetic control tasks.

- Reinforcement Learning: Algorithms like Q-Learning and Deep Reinforcement Learning can be used to optimize the control strategies of the prosthetic hand by learning from user feedback and performance metrics, leading to adaptive and personalized control.

The choice of algorithm(s) depends on factors such as the complexity of the task, available training data, computational resources, and real-time performance requirements.
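For the reinforcement learning option, the core of tabular Q-learning is a single update rule applied after every interaction. The sketch below shows that rule together with epsilon-greedy action selection. The states ("slipping", "stable"), the grip actions, and the reward are hypothetical; a real prosthetic controller would need a carefully designed state space and reward signal.

```python
import random
from collections import defaultdict

ACTIONS = ["loosen", "hold", "tighten"]  # hypothetical grip adjustments


def choose_action(q_table, state, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q_table[(state, a)])


def q_update(q_table, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply the standard tabular Q-learning update rule.

    Q(s, a) += alpha * (reward + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q_table[(next_state, a)] for a in ACTIONS)
    target = reward + gamma * best_next
    q_table[(state, action)] += alpha * (target - q_table[(state, action)])


q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
# Hypothetical feedback: tightening while the object slips earns reward 1.
q_update(q_table, "slipping", "tighten", reward=1.0, next_state="stable")
print(q_table[("slipping", "tighten")])  # prints 0.5
print(choose_action(q_table, "slipping", epsilon=0.0, rng=random.Random(0)))
```

With `epsilon=0.0` the policy always exploits, so after this one update it selects "tighten" in the "slipping" state. During learning, a small positive epsilon keeps the controller trying alternatives.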

3. Embedded System Development

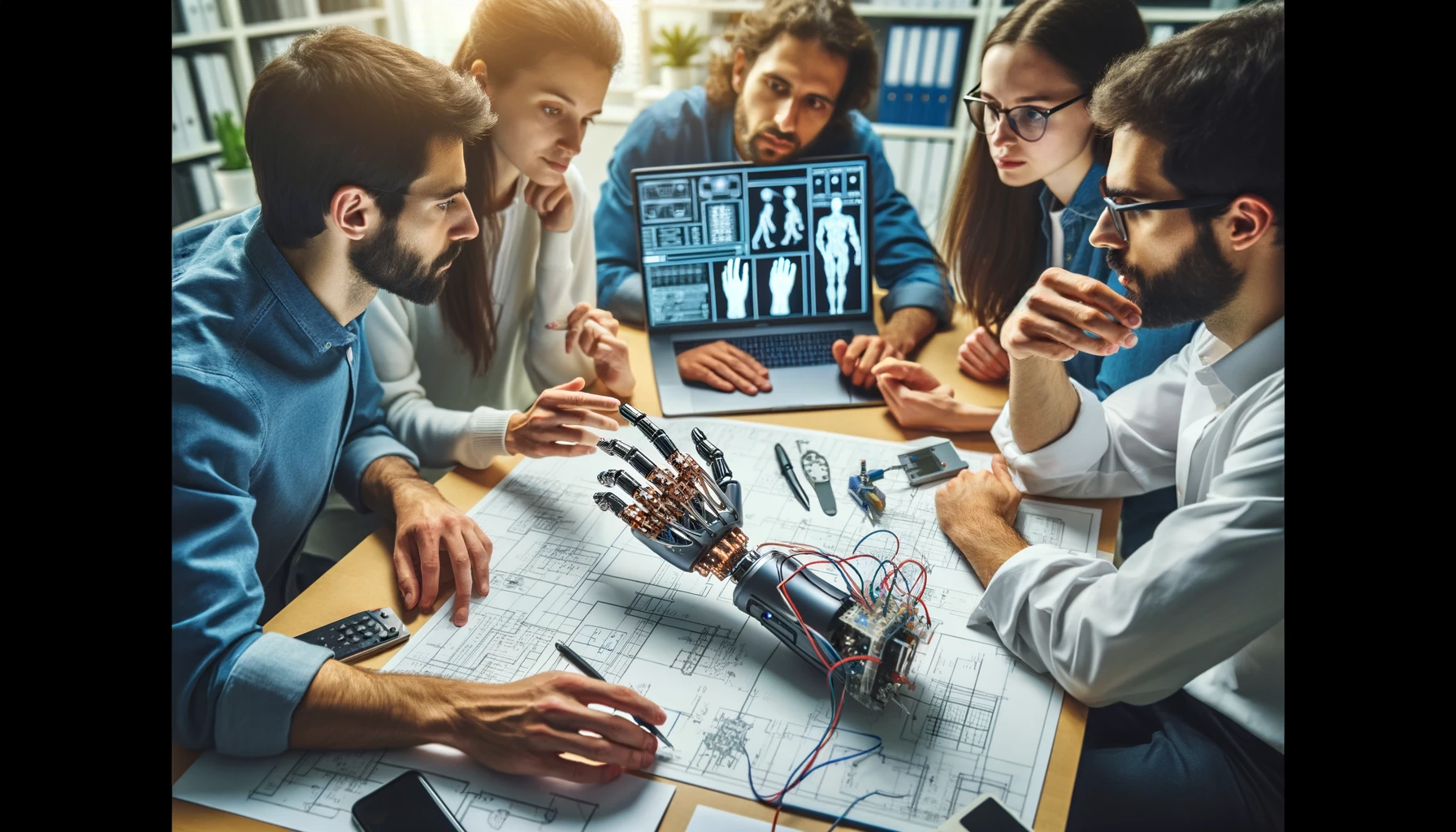

The embedded system development phase involves integrating the machine learning algorithms with the hardware components, including the microcontroller or embedded processor, sensor modules, actuator modules, power management unit, and communication interfaces.

The key steps in embedded system development include:

- Hardware Selection and Integration: Choosing the appropriate microcontroller or embedded processor based on computational requirements, power consumption, and interface compatibility. Integrating the selected hardware components and ensuring proper communication between them.

- Firmware Development: Writing the embedded software or firmware to implement the machine learning algorithms, handle sensor data acquisition, control the actuators, manage power, and facilitate communication with external devices.

- Real-time Operation and Optimization: Ensuring that the embedded system can perform real-time processing and actuation of the prosthetic hand while meeting performance and power constraints.

- User Interface and Control: Developing user-friendly interfaces, such as mobile apps or computer software, for configuring and monitoring the prosthetic hand’s performance.
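As a rough illustration of the firmware logic in the steps above, the sketch below smooths a stream of classifier decisions with a majority vote and maps the result to servo target angles. It is written in Python for readability; firmware on a microcontroller would more commonly be written in C or C++. The gesture names, angle table, and window length are assumptions for illustration.

```python
from collections import Counter, deque

# Hypothetical target angles in degrees for five finger servos.
GESTURE_ANGLES = {
    "open": [0, 0, 0, 0, 0],
    "close": [90, 90, 90, 90, 90],
    "pinch": [60, 60, 0, 0, 0],
}


class MajorityVoteFilter:
    """Smooth classifier output by voting over the last few decisions."""

    def __init__(self, window=3):
        self.history = deque(maxlen=window)  # oldest entries drop off

    def update(self, gesture):
        self.history.append(gesture)
        # most_common(1) returns the most frequent label in the window.
        return Counter(self.history).most_common(1)[0][0]


def servo_targets(gesture):
    """Look up servo angles, falling back to an open hand if unknown."""
    return GESTURE_ANGLES.get(gesture, GESTURE_ANGLES["open"])


vote = MajorityVoteFilter(window=3)
stream = ["open", "open", "close", "open", "close", "close"]
smoothed = [vote.update(g) for g in stream]
print(smoothed)
print(servo_targets(smoothed[-1]))
```

The vote suppresses the isolated "close" in the third position, so the hand does not twitch on a single misclassification. The cost is a short delay before a genuine gesture change takes effect, which is one of the latency trade-offs to tune during real-time optimization.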

4. Testing and Evaluation

Rigorous testing and evaluation are essential to ensure the reliability, safety, and effectiveness of the artificial hand embedded system. This phase involves:

- Benchtop Testing: Evaluating the performance of individual components, such as sensor accuracy, machine learning algorithm performance, and actuator response, under controlled laboratory conditions.

- User Studies: Conducting trials with human participants to assess the usability, comfort, and functionality of the prosthetic hand in real-world scenarios.

- Performance Metrics: Defining and measuring relevant performance metrics, such as gesture recognition accuracy, response time, battery life, and user satisfaction.

- Iterative Improvements: Based on the testing and evaluation results, making necessary adjustments and improvements to the system’s hardware, software, and machine learning algorithms.
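The performance metrics mentioned above can be computed with a few small helpers. The sketch below calculates gesture recognition accuracy, counts confusions between gestures, and summarizes response time. All trial data here is made up for illustration and does not represent measured results.

```python
from collections import Counter
from statistics import mean


def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the ground truth."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def confusion_counts(true_labels, predicted_labels):
    """Count (true, predicted) pairs; pairs with differing labels are errors."""
    return Counter(zip(true_labels, predicted_labels))


def latency_summary(latencies_ms):
    """Mean and worst-case response time in milliseconds."""
    return {"mean_ms": mean(latencies_ms), "max_ms": max(latencies_ms)}


# Made-up trial data for illustration only.
truth = ["open", "open", "close", "close", "pinch"]
preds = ["open", "close", "close", "close", "pinch"]
print(accuracy(truth, preds))                             # prints 0.8
print(confusion_counts(truth, preds)[("open", "close")])  # prints 1
print(latency_summary([80, 120, 100]))
```

Looking at the confusion counts alongside overall accuracy shows which gesture pairs the classifier mixes up, which is often more useful for iterative improvement than a single accuracy figure.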

5. Future Work and Improvements

The field of artificial hand embedded systems using machine learning is rapidly evolving, and there is significant potential for future work and improvements:

- Advanced Machine Learning Techniques: Exploring the application of cutting-edge machine learning techniques, such as transfer learning, federated learning, and meta-learning, to improve the adaptability and personalization of prosthetic control.

- Multi-modal Sensor Fusion: Integrating multiple sensor modalities, such as EMG, vision, and tactile feedback, to enhance the system’s perception and control capabilities.

- Improved Dexterity and Anthropomorphic Design: Developing more dexterous and anthropomorphic prosthetic hands with increased degrees of freedom and lifelike appearance and functionality.

- Cybernetic Integration: Investigating the potential of direct neural interfaces, such as brain-computer interfaces (BCIs), for intuitive and seamless control of prosthetic limbs.

- Power Management and Miniaturization: Improving power efficiency and miniaturizing the embedded system components to create more compact and long-lasting prosthetic devices.

Conclusion

The development of an artificial hand embedded system using machine learning represents a significant step forward in the field of biomedical engineering and prosthetic limb technology. By combining advanced machine learning algorithms, sensor integration, and embedded system design, researchers can create intelligent and adaptive prosthetic hands that closely mimic the natural movements and dexterity of a human hand. This technology has the potential to significantly improve the quality of life for individuals with upper limb amputations, enabling them to perform daily tasks with greater ease and independence.

However, the successful implementation of such a system requires interdisciplinary collaboration among engineers, computer scientists, and medical professionals. Continuous research, testing, and iterative improvements are necessary to overcome challenges and ensure the reliability, safety, and user-friendliness of these devices.

As technology continues to advance, the future of artificial hand embedded systems using machine learning holds immense promise, paving the way for more sophisticated and seamless human-machine interfaces, enhanced dexterity, and personalized prosthetic solutions.

FAQs

What are the main advantages of using machine learning algorithms in prosthetic hand control?

Machine learning algorithms can provide intuitive and adaptive control of prosthetic hands by recognizing complex patterns in sensor data and adjusting to user-specific requirements. This can lead to improved dexterity, natural movements, and a better user experience.

How does the embedded system architecture facilitate real-time operation and control of the prosthetic hand?

The embedded system architecture integrates the machine learning algorithms with the hardware components, such as microcontrollers, sensors, and actuators. It enables real-time processing of sensor data, execution of control algorithms, and actuation of the prosthetic hand, ensuring smooth and responsive operation.

What are some common sensors used in artificial hand embedded systems?

Common sensors used in these systems include electromyography (EMG) sensors for detecting muscle activity, accelerometers and gyroscopes for tracking motion and orientation, and force sensors for measuring grip force and tactile feedback.

How are machine learning algorithms trained for prosthetic hand control?

Machine learning algorithms can be trained using supervised learning techniques, where labeled sensor data corresponding to different hand gestures or movements is used to train the algorithms to recognize patterns. Reinforcement learning algorithms can also be employed to optimize control strategies based on user feedback and performance metrics.

What are some potential future improvements in artificial hand embedded systems using machine learning?

Future improvements may include advanced machine learning techniques like transfer learning and meta-learning for better adaptation, multi-modal sensor fusion for enhanced perception, improved dexterity and anthropomorphic design, direct neural interfaces for intuitive control, and power management and miniaturization for more compact and long-lasting devices.