
Unlocking the Future: A Dive into Neuromorphic Computing

Introduction to Neuromorphic Computing

Neuromorphic computing is an approach to building computers that mimics the human brain’s neural structure and functionality. Rather than following the conventional model, in which a processor fetches instructions and data from a separate memory, it processes information in ways closer to biological systems. The goal is to improve energy efficiency, speed, and learning capabilities beyond what traditional designs allow.

Understanding Neuromorphic Computing

The Basics

Neuromorphic computing involves the design of electronic systems inspired by the human nervous system’s architecture. It integrates principles from various disciplines, including neuroscience, physics, and computer science, aiming to replicate the brain’s efficiency in cognitive tasks and sensory processing.

Key Features

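- Energy Efficiency: Aims to consume far less power than conventional computing systems on suitable workloads, typically because circuits do work only when signals (spikes) arrive rather than on every clock cycle.

- Real-time Processing: Processes information with low latency and in parallel, with many neurons operating at once, similar to the human brain.

- Adaptability: Learns from new data by adjusting the strength of its connections, improving over time.

- Fault Tolerance: Keeps working, with gradual rather than abrupt loss of quality, when individual components fail, mirroring the brain’s resilience.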
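One common explanation for the energy-efficiency feature listed above is event-driven computation: work is done only when an input is active. The sketch below compares operation counts for a dense update and an event-driven update of the same small layer. The function names and the use of operation counts as a rough stand-in for energy are assumptions made for illustration, not measurements of any real chip.

```python
def dense_ops(n_inputs, n_outputs, n_steps):
    """Operations if every input-output connection is evaluated on every step."""
    return n_inputs * n_outputs * n_steps


def event_driven_ops(spike_trains, n_outputs):
    """Operations if a connection is evaluated only when its input spikes.

    spike_trains is a list of time steps; each step is a list of 0/1 values,
    one per input (hypothetical data format chosen for this sketch).
    """
    total_spikes = sum(sum(step) for step in spike_trains)
    return total_spikes * n_outputs


# Three time steps, four inputs, mostly silent.
spikes = [
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 1, 0, 1],
]
```

With these inputs and two output neurons, `dense_ops(4, 2, 3)` counts 24 operations while `event_driven_ops(spikes, 2)` counts 6. The gap grows as activity becomes sparser, which is the intuition behind the power savings.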

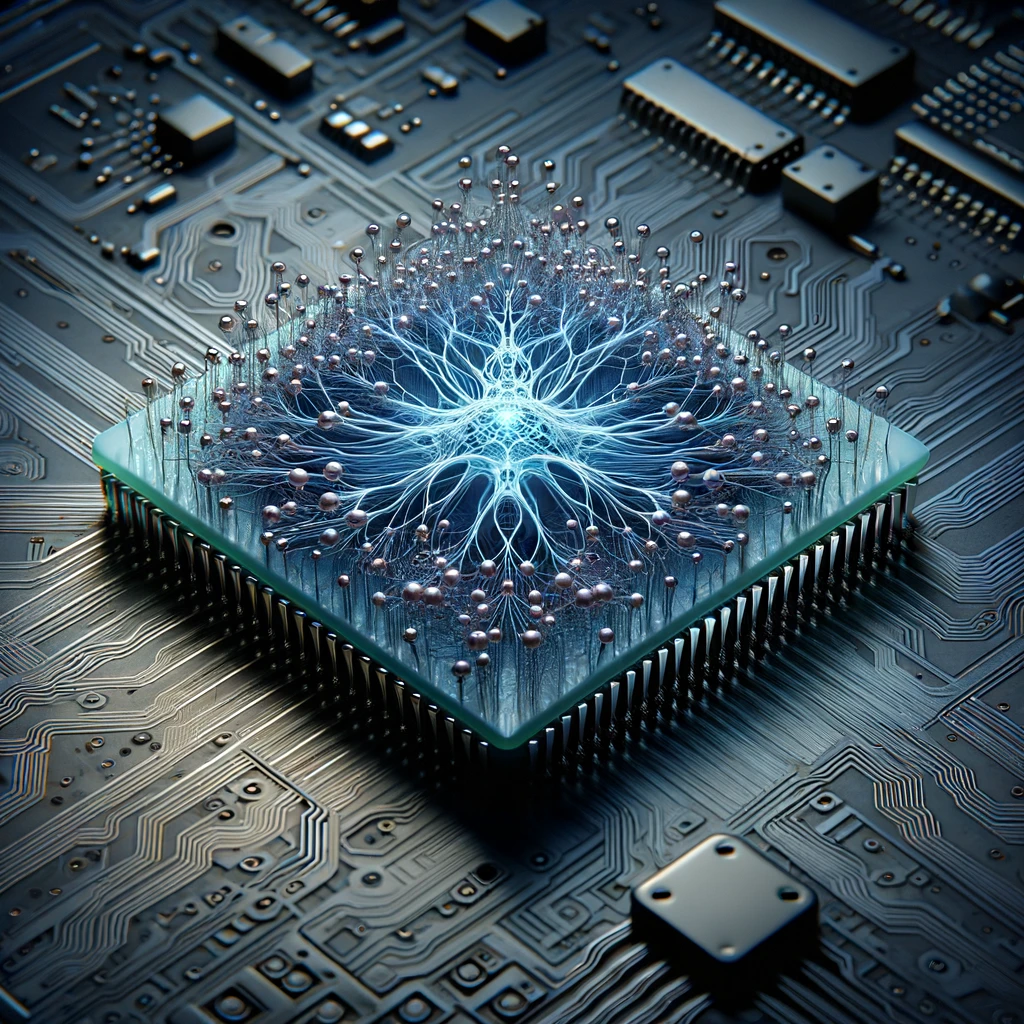

Neuromorphic Computing Architecture

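The architecture of neuromorphic computing systems differs fundamentally from that of traditional computers. Instead of a central processor that is separate from memory, it consists of networks of artificial neurons and synapses, with the stored connection strengths sitting alongside the units that compute. These networks learn and adapt through changes in their connections, akin to learning processes in the brain.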
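To make "learning through changes in connections" concrete, here is a minimal sketch of one widely used rule, spike-timing-dependent plasticity (STDP). The article does not name a specific learning rule, so the choice of STDP, the function names, and all constants (`a_plus`, `a_minus`, `tau_ms`, the weight bounds) are assumptions for illustration only.

```python
import math


def stdp_delta(dt_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Weight change for one pre/post spike pair, where dt_ms = t_post - t_pre.

    If the input (pre) spike comes shortly before the output (post) spike,
    the connection is strengthened; if it comes after, it is weakened.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0


def update_weight(weight, pre_spikes_ms, post_spikes_ms, w_min=0.0, w_max=1.0):
    """Apply the pair-based rule over all spike pairs, then clip to bounds."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))
```

For example, `update_weight(0.5, [10.0], [15.0])` returns a value slightly above 0.5 because the input spike preceded the output spike, while swapping the two spike times returns a value slightly below 0.5.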

Components

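- Neurons: Act as processing units that accumulate incoming signals and emit a signal of their own (a spike) once a threshold is reached, mimicking the brain’s neurons.

- Synapses: Serve as weighted connections between neurons, carrying signals from one neuron to the next and changing strength as the system learns.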
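The two components above can be sketched in a few lines using a leaky integrate-and-fire (LIF) neuron, a common simplified neuron model. This is an illustrative sketch rather than a description of any particular chip: the class name, the `run` helper, and the default `threshold` and `leak` values are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    threshold: float = 1.0   # potential at which the neuron fires (assumed value)
    leak: float = 0.9        # fraction of potential kept each step (assumed value)
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron, weight, input_spikes):
    """Feed a 0/1 spike train through one synapse of the given weight."""
    return [neuron.step(weight * spike) for spike in input_spikes]
```

Calling `run(LIFNeuron(), 0.4, [1, 1, 1, 0, 0, 1, 1, 1])` returns `[False, False, True, False, False, False, False, True]`: the neuron needs three closely spaced input spikes to reach its threshold, fires, resets, and stays silent until enough input accumulates again. The synapse here is just the `weight` multiplier; a learning rule would adjust that value over time.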

Applications and Jobs

Neuromorphic computing finds applications in areas requiring complex cognitive functions, such as:

- Robotics: Enhancing robots with the ability to learn from their environment.

- Autonomous Vehicles: Improving decision-making in real-time driving conditions.

- Healthcare: Advancing diagnostic tools and personalized medicine.

The field also offers burgeoning career opportunities, ranging from research and development to specialized hardware design and application development.

Education and Resources

Learning Opportunities

- Courses: A variety of courses are available, covering topics from the basics of neuromorphic computing to advanced design and applications.

- Books and Journals: Numerous resources offer in-depth insights, including textbooks, research papers, and dedicated journals.

Neuromorphic Computing in 2023 and Beyond

Research and development in neuromorphic computing continue, and the field is expected to produce new architectures, more efficient chips, and a broader range of applications.

FAQs

Q: How does neuromorphic computing differ from traditional computing?

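A: Neuromorphic computing mimics the brain’s neural networks, emphasizing massively parallel, event-driven processing and energy efficiency. Traditional computers instead execute streams of instructions on processors that are separate from memory, so data must move back and forth between the two.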

Q: Can neuromorphic chips replace current CPUs and GPUs?

A: While neuromorphic chips offer unique advantages, they are not meant to replace CPUs and GPUs but rather complement them by handling tasks requiring neural network processing more efficiently.

Q: What are the challenges facing neuromorphic computing?

A: Major challenges include scalability, the complexity of design and fabrication, and creating algorithms suited for its unique architecture.

Q: Where can I study neuromorphic computing?

A: Many universities and online platforms offer courses ranging from introductory to advanced levels, along with specialized programs for research and development.

Conclusion

Neuromorphic computing offers a glimpse into a future where machines learn and process information far more efficiently than they do today. As research progresses, its potential applications span many industries, from healthcare to autonomous vehicles. For those intrigued by the intersection of computing and neuroscience, the field offers a challenging yet rewarding path with many opportunities for exploration and discovery.