Speech Emotion Recognition With Librosa

Speech Emotion Recognition (SER) involves detecting and interpreting human emotions from speech signals. This technology has a broad range of applications, including customer service, mental health monitoring, and human-computer interaction. Understanding the emotions in speech can enhance user experiences by making interactions more natural and effective. This article uses librosa, a Python library for audio analysis, to load speech recordings and extract the features an emotion classifier needs.

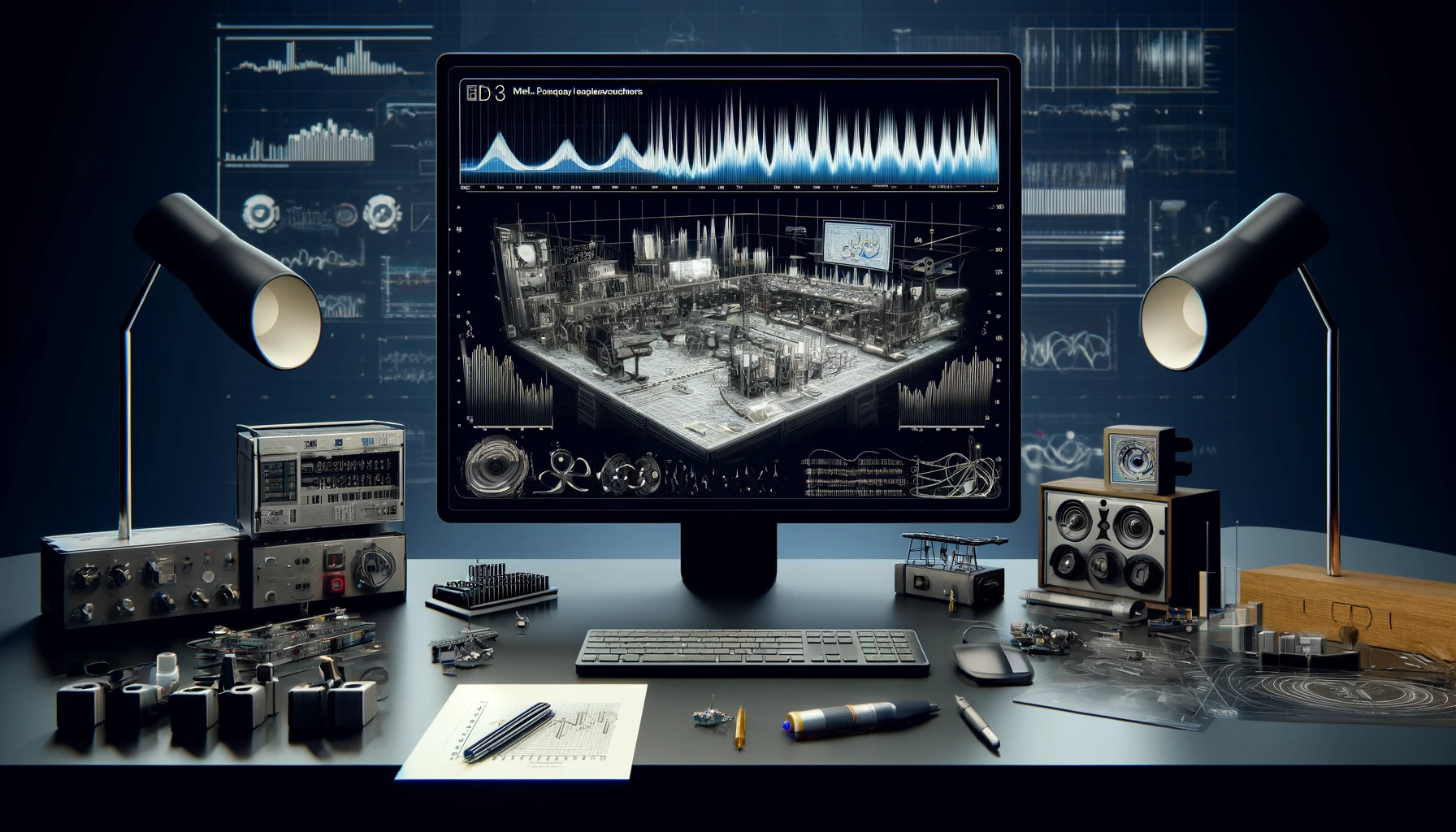

What is Librosa?

librosa is a powerful Python library widely used for audio and music analysis. It provides a comprehensive suite of tools for working with audio data, including functions for reading, transforming, and analyzing audio signals. Its relevance to SER lies in its ability to extract meaningful features from audio, which are crucial for emotion recognition.

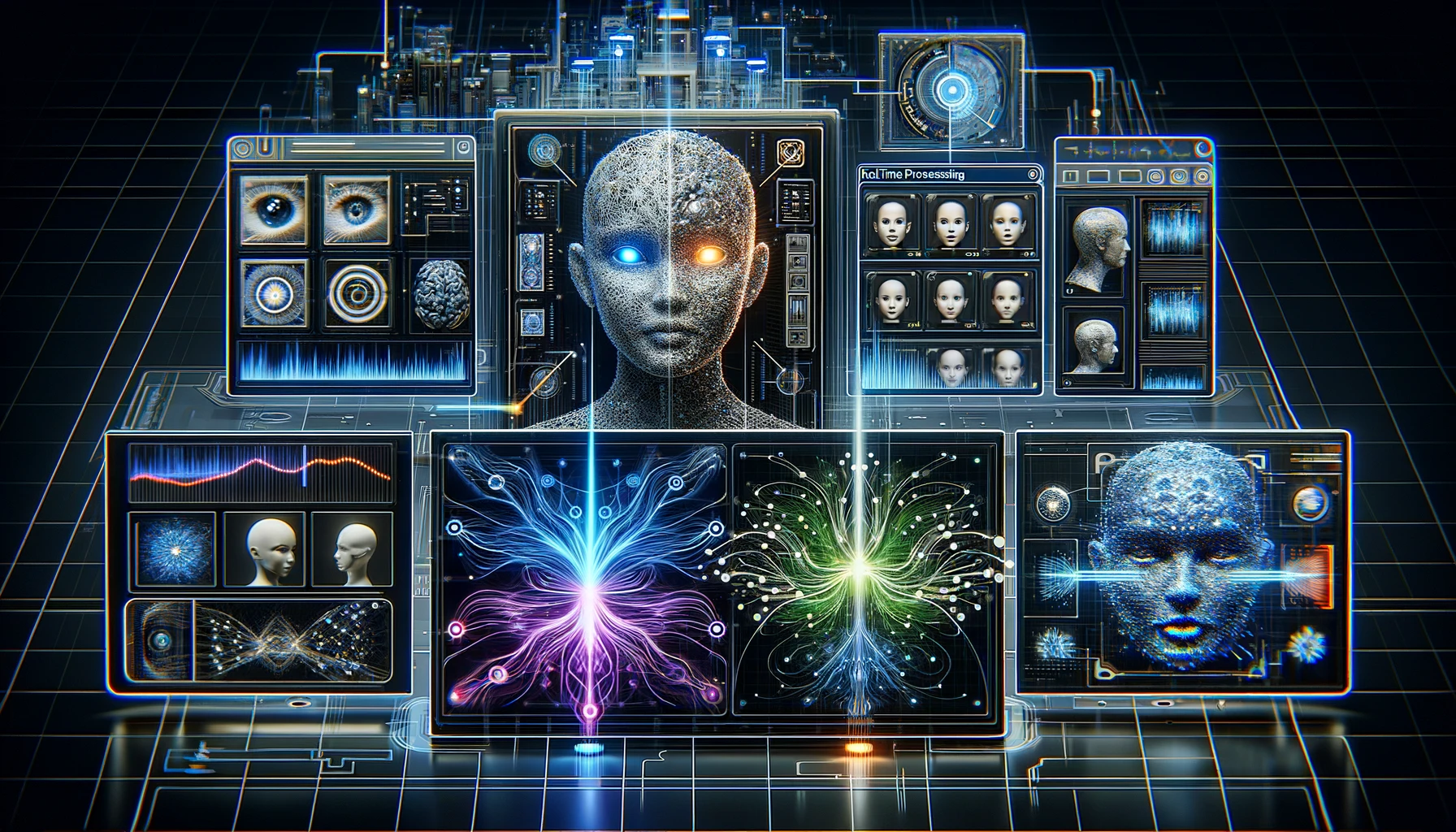

The Science Behind Speech Emotion Recognition

SER leverages various principles and techniques from fields like signal processing, machine learning, and neuroscience. The core idea is to analyze the acoustic properties of speech—such as pitch, tone, and rhythm—to infer emotional states. These properties are often represented through features that machine learning models can process to make predictions.

Setting Up Your Environment for Speech Emotion Recognition with Librosa

Step-by-Step Guide to Installing Librosa and Other Libraries

- Install Python: Ensure Python is installed on your system. Recent librosa releases no longer support Python 3.6, so a currently supported Python 3 version is the safer choice.

- Set up a virtual environment: This isolates your project dependencies.

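python -m venv ser_env

source ser_env/bin/activate

(On Windows, run ser_env\Scripts\activate instead of the source command.)

- Install librosa:

pip install librosa

- Install additional libraries (seaborn is included because the confusion matrix plot later in this article uses it):

pip install numpy scipy scikit-learn matplotlib seaborn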
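To confirm that the installation steps above worked, a short check like the following can help. It is a minimal sketch that uses only the standard library; the `PACKAGES` list and the `installed_versions` helper are illustrative names, not part of librosa.

```python
from importlib import metadata

# Distribution names as they appear on PyPI (assumed to match the pip commands above).
PACKAGES = ["librosa", "numpy", "scipy", "scikit-learn", "matplotlib"]


def installed_versions(packages):
    """Return {package: version string, or None if the package is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Print one line per package so missing installs are easy to spot.
for name, version in installed_versions(PACKAGES).items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Run it inside the activated virtual environment; any package reported as NOT INSTALLED needs another `pip install`.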

Key Features of librosa for Speech Emotion Recognition

librosa offers several features that make it ideal for SER:

- Audio loading: Easy loading of audio files in various formats.

- Time-domain and frequency-domain analysis: Tools for analyzing audio signals.

- Feature extraction: Functions to extract features like Mel-Frequency Cepstral Coefficients (MFCCs), chroma features, and spectral contrast.

- Visualization: Tools for visualizing audio data and extracted features.

Data Collection and Preparation

Best Practices for Collecting and Preparing Audio Data for Emotion Recognition

- Source quality data: Ensure your audio samples are high-quality and diverse.

- Annotate emotions: Label your data with the corresponding emotions.

- Normalize audio: Ensure consistent volume levels across samples.

- Split data: Divide your data into training, validation, and test sets.
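Two of the steps above, normalizing and splitting, can be sketched in a few lines. Peak normalization is only one way to get consistent levels (RMS or loudness normalization are alternatives), and the random clips, the four emotion names, and the 60/20/20 ratio are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def peak_normalize(y, peak=0.95):
    """Scale a waveform so its largest absolute sample equals `peak`."""
    max_abs = np.max(np.abs(y))
    if max_abs == 0:  # silent clip: nothing to scale
        return y
    return y * (peak / max_abs)


# Stand-in data: 100 random "clips" at different volumes, 25 per hypothetical emotion.
rng = np.random.default_rng(0)
clips = [rng.normal(scale=rng.uniform(0.01, 0.5), size=1000) for _ in range(100)]
labels = np.repeat(["angry", "happy", "neutral", "sad"], 25)

clips = [peak_normalize(c) for c in clips]

# Split indices 60/20/20; stratify keeps emotion proportions equal in each subset.
idx = np.arange(len(clips))
train_idx, test_idx = train_test_split(
    idx, test_size=0.2, stratify=labels, random_state=0
)
train_idx, val_idx = train_test_split(
    train_idx, test_size=0.25, stratify=labels[train_idx], random_state=0
)
print(len(train_idx), len(val_idx), len(test_idx))
```

Splitting indices rather than the clips themselves keeps audio, labels, and any later feature arrays aligned. For datasets with few speakers, splitting by speaker rather than by clip is worth considering so the same voice does not appear in both training and test sets.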

Feature Extraction with librosa

Feature extraction is a critical step in SER. It involves transforming raw audio data into numerical features that a machine learning model can use.

import librosa

# Load an audio file

y, sr = librosa.load('audio.wav')

# Extract MFCC features

mfccs = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13)

Understanding MFCCs in Speech Emotion Recognition

Explaining Mel-Frequency Cepstral Coefficients (MFCCs) and Their Role in Emotion Recognition

MFCCs are a small set of coefficients that together summarize the shape of the short-term power spectrum of a sound on the mel scale, a frequency scale that approximates human pitch perception. They are widely used in speech and audio processing because they capture the timbral aspects of speech, which help distinguish different emotions.

Implementing Speech Emotion Recognition with librosa

A Detailed Tutorial on Building a Speech Emotion Recognition System Using librosa

- Load and preprocess data: Use librosa to load audio files and extract features.

- Train a machine learning model: Use scikit-learn or similar libraries to train a classifier on the extracted features.

- Evaluate the model: Assess the model’s performance using metrics like accuracy, precision, and recall.
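The three steps above can be sketched end to end as follows. Because no dataset ships with this article, the feature matrix here is synthetic (generated with `make_classification`) and simply stands in for per-clip features extracted with librosa. The scaler and the per-class report are additions of this sketch, included because the evaluation step mentions precision and recall.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in: 400 "clips", 26 features each, 4 emotion classes.
# Replace with features and labels built from real audio.
features, labels = make_classification(
    n_samples=400, n_features=26, n_informative=10, n_classes=4, random_state=0
)

X_train, X_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.2, stratify=labels, random_state=0
)

# Scaling matters for SVMs because the default RBF kernel is distance-based.
model = make_pipeline(StandardScaler(), SVC())
model.fit(X_train, y_train)

# classification_report prints precision, recall, and F1 for every class.
y_pred = model.predict(X_test)
report = classification_report(y_test, y_pred)
print(report)
```

Putting the scaler inside the pipeline means it is fitted on the training split only, which avoids leaking test-set statistics into training.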

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.metrics import accuracy_score

# Split data (features: one row of extracted features per clip; labels: one emotion label per clip)

X_train, X_test, y_train, y_test = train_test_split(features, labels, test_size=0.2)

# Train model

model = SVC()

model.fit(X_train, y_train)

# Evaluate model

y_pred = model.predict(X_test)

print(f'Accuracy: {accuracy_score(y_test, y_pred)}')

Training Your Model for Emotion Recognition

Techniques and Tips for Training a Machine Learning Model to Recognize Emotions in Speech

- Use balanced datasets: Ensure all emotions are equally represented.

- Apply data augmentation: Enhance your dataset with variations of existing samples.

- Tune hyperparameters: Optimize your model by tuning parameters like kernel type for SVM.
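Hyperparameter tuning, the last tip above, is often done with a grid search. The following sketch searches over the SVM kernel type and the regularization strength `C`. The grid values, the macro-F1 scoring choice, and the synthetic data are assumptions for illustration, not recommendations from the article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for per-clip features and 4 emotion classes.
X, y = make_classification(
    n_samples=300, n_features=26, n_informative=10, n_classes=4, random_state=0
)

pipeline = Pipeline([("scale", StandardScaler()), ("svm", SVC())])

# Parameter names are prefixed with the pipeline step name and two underscores.
param_grid = {
    "svm__kernel": ["linear", "rbf"],
    "svm__C": [0.1, 1, 10],
}

# 5-fold cross-validation for every combination; macro F1 weights all classes equally.
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="f1_macro")
search.fit(X, y)

print(search.best_params_)
print(round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` is a pipeline refitted on all the data with the best parameters and can be used for prediction directly.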

Evaluating the Performance of Your Emotion Recognition Model

How to Assess the Accuracy and Effectiveness of Your Speech Emotion Recognition System

- Confusion Matrix: Visualize the performance of your model across different emotion classes.

- Cross-validation: Use cross-validation to ensure your model’s robustness.
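Cross-validation, mentioned in the list above, can be sketched with scikit-learn's `cross_val_score`. The data is again a synthetic stand-in for real features, and five stratified folds is an assumed, commonly used setting.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for per-clip features and 4 emotion classes.
X, y = make_classification(
    n_samples=300, n_features=26, n_informative=10, n_classes=4, random_state=0
)

model = make_pipeline(StandardScaler(), SVC())

# Stratified folds keep the emotion proportions similar in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv)

print(f"Accuracy per fold: {scores.round(3)}")
print(f"Mean: {scores.mean():.3f}, std: {scores.std():.3f}")
```

A large spread between folds suggests the score from a single train/test split should not be trusted on its own.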

from sklearn.metrics import confusion_matrix

import matplotlib.pyplot as plt

import seaborn as sns

# Compute confusion matrix

cm = confusion_matrix(y_test, y_pred)

# Plot confusion matrix

sns.heatmap(cm, annot=True, fmt='d')

plt.xlabel('Predicted')

plt.ylabel('True')

plt.show()

Common Challenges in Speech Emotion Recognition

Identifying and Addressing Common Issues Faced in Emotion Recognition Projects

- Data imbalance: Mitigate by augmenting underrepresented classes.

- Noise in data: Use noise reduction techniques to clean your audio samples.

- Overfitting: Prevent by using regularization (for an SVM, a smaller C value) or, in neural networks, techniques like dropout.
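For the data imbalance item above, a useful first step is to count how many augmented clips each class would need in order to match the largest class. This is a minimal sketch; `augmentation_plan` and the label counts are hypothetical.

```python
from collections import Counter


def augmentation_plan(labels):
    """Return {label: number of extra clips needed to match the largest class}."""
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}


# Hypothetical imbalanced label list: 6 neutral, 3 happy, 1 angry.
labels = ["neutral"] * 6 + ["happy"] * 3 + ["angry"] * 1
plan = augmentation_plan(labels)
print(plan)  # {'neutral': 0, 'happy': 3, 'angry': 5}
```

Augmented copies should be created from training clips only, so that the validation and test sets still reflect unmodified recordings.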

Improving Accuracy with Data Augmentation

Using Data Augmentation Techniques to Enhance the Performance of Your Emotion Recognition System

- Time-stretching: Alter the speed of audio without changing the pitch.

- Pitch shifting: Change the pitch of audio without altering the speed.

- Adding noise: Introduce random noise to make the model robust to real-world conditions.
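Noise addition needs nothing beyond NumPy. The sketch below adds white Gaussian noise at a chosen signal-to-noise ratio (SNR). The `add_noise` helper, the 20 dB value, and the synthetic tone are assumptions for illustration; real-world background noise is usually not white, so recorded noise samples may be a better match in practice.

```python
import numpy as np


def add_noise(y, snr_db, rng=None):
    """Add white Gaussian noise so the result has roughly the given SNR in dB."""
    rng = np.random.default_rng() if rng is None else rng
    signal_power = np.mean(y ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=y.shape)
    return y + noise


# Synthetic one-second tone standing in for a speech clip.
sr = 22050
t = np.arange(sr) / sr
y = 0.5 * np.sin(2 * np.pi * 220.0 * t)

y_noisy = add_noise(y, snr_db=20, rng=np.random.default_rng(0))

# Measure the SNR actually achieved; it should be close to the requested 20 dB.
measured_snr = 10 * np.log10(np.mean(y ** 2) / np.mean((y_noisy - y) ** 2))
print(round(measured_snr, 1))
```

Lower SNR values make the task harder; sampling the SNR from a range for each augmented clip is one way to expose the model to varied conditions.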

import librosa.effects

# Time-stretching

y_stretch = librosa.effects.time_stretch(y, rate=1.2)

# Pitch shifting (sr is passed by keyword, which recent librosa versions require)

y_shift = librosa.effects.pitch_shift(y, sr=sr, n_steps=4)

Real-World Applications of Speech Emotion Recognition

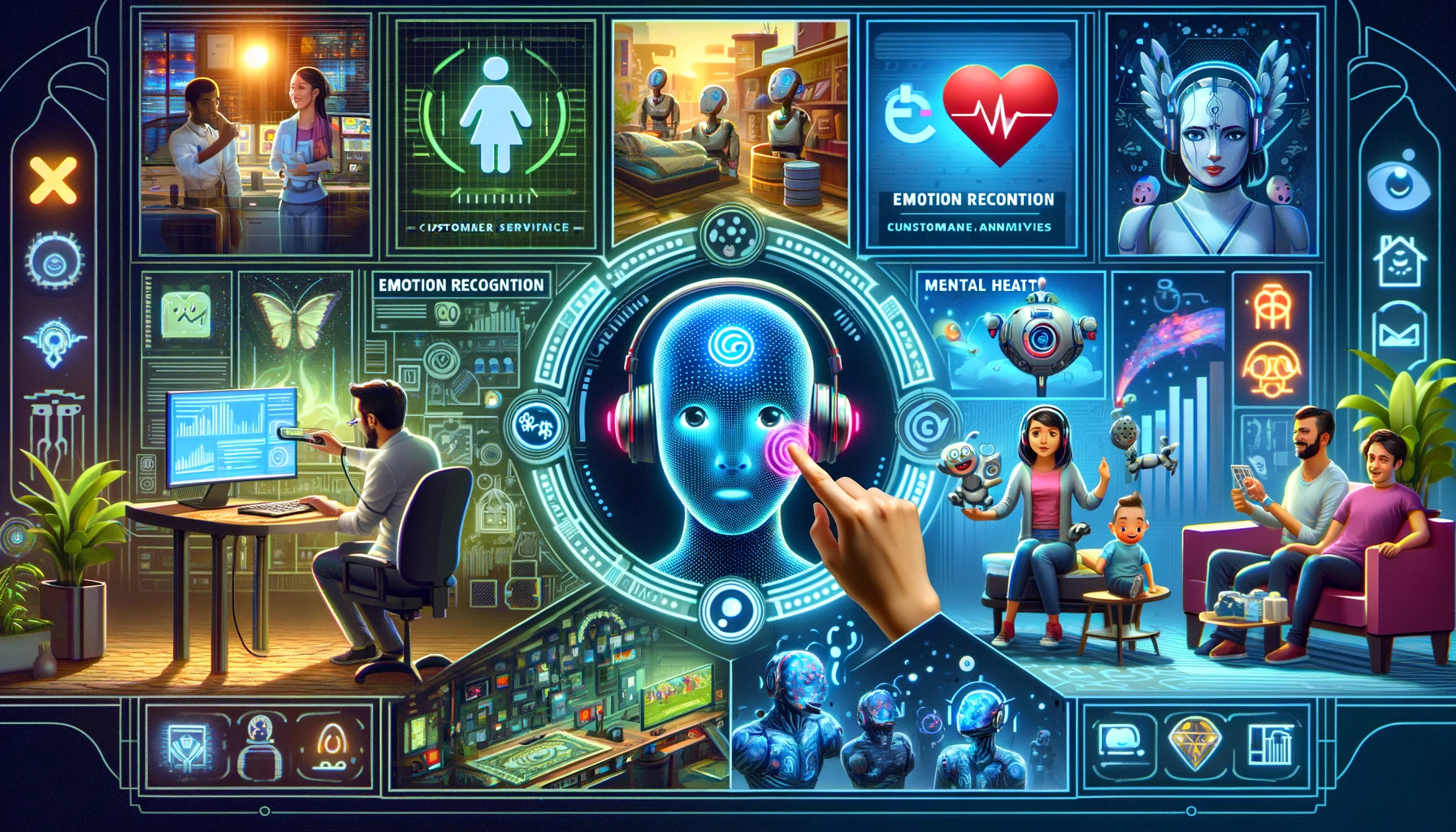

Exploring Various Applications and Industries Where Speech Emotion Recognition is Making an Impact

- Customer service: Enhance customer interactions by detecting emotions.

- Mental health: Monitor patients’ emotional states for better mental health care.

- Entertainment: Create more engaging and responsive gaming experiences.

Future Trends in Speech Emotion Recognition

Looking Ahead at Emerging Trends and Technologies in the Field of Speech Emotion Recognition

- Deep learning: Utilizing advanced neural networks for improved accuracy.

- Real-time processing: Developing systems capable of recognizing emotions in real-time.

- Multimodal emotion recognition: Combining speech with other modalities like facial expressions and body language.

Resources and Further Reading

Providing a List of Resources for Readers Who Want to Dive Deeper into Speech Emotion Recognition with librosa

- Books: “Deep Learning for Natural Language Processing” by Palash Goyal

- Online Courses: Coursera’s “Audio Signal Processing for Music Applications”

- Research Papers: “Speech Emotion Recognition Using Deep Learning Techniques” (IEEE)

Conclusion: Harnessing the Power of Speech Emotion Recognition with librosa

librosa provides a robust toolkit for building SER systems, enabling applications that can transform various industries. By leveraging its features and integrating advanced machine learning techniques, we can create systems that accurately and efficiently recognize emotions in speech, paving the way for more intuitive and human-like interactions.

FAQs

Q1: What is Speech Emotion Recognition?

A1: Speech Emotion Recognition is the process of identifying and interpreting emotions from speech signals using various audio processing and machine learning techniques.

Q2: Why use librosa for SER?

A2: librosa is a versatile Python library for audio analysis, offering functions for loading audio and for extracting the features, such as MFCCs, chroma, and spectral contrast, that SER depends on.

Q3: What are MFCCs?

A3: Mel-Frequency Cepstral Coefficients are features that summarize the short-term power spectrum of a sound on the mel scale and are widely used in speech and audio processing for their ability to capture timbral properties.

Q4: How can I improve the accuracy of my SER system?

A4: Improve accuracy by using balanced datasets, applying data augmentation techniques, and optimizing your machine learning model’s hyperparameters.

Q5: What are some real-world applications of SER?

A5: Real-world applications include customer service, mental health monitoring, and interactive entertainment systems.